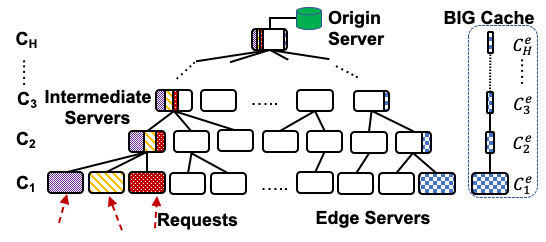

Large content service providers such as Google/YouTube and Netflix employ one or more Content Distribution Networks (CDNs) to deliver content to users in a scalable and timely manner. These CDNs are often organized hierarchically, with multiple tiers of geographically dispersed content servers: the lowest tier consists of edge servers (closest to users), and origin servers sit at the top of the hierarchy. This allows an edge server to route requests through a sequence of intermediate cache servers towards the origin server until a copy of the requested content is found. However, when these cache servers act independently, they suffer from thrashing: objects are cached and then evicted before their next request arrives, which leaves the cache space under-utilized and significantly reduces cache efficiency.
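To make the thrashing problem concrete, here is a minimal sketch of a path of independently managed caches. It rests on assumptions not stated above: each cache runs plain LRU, and a fetched object leaves a copy in every cache it passes on the way back to the edge. The names `LRUCache`, `serve`, and `origin_fetches` are hypothetical and chosen only for illustration.

```python
from collections import OrderedDict


class LRUCache:
    """A single, independently managed LRU cache (illustrative only)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()  # last key = most recently used

    def lookup(self, obj):
        """Return True on a hit and refresh the object's recency."""
        if obj in self._items:
            self._items.move_to_end(obj)
            return True
        return False

    def insert(self, obj):
        """Store obj, evicting the least recently used object if full."""
        self._items[obj] = None
        self._items.move_to_end(obj)
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)


def serve(path, obj):
    """Walk from the edge (path[0]) towards the origin.

    Returns the index of the cache that served obj, or None if the
    request had to go all the way to the origin server.
    """
    hit_tier = None
    for tier, cache in enumerate(path):
        if cache.lookup(obj):
            hit_tier = tier
            break
    # Assumption: every cache that missed keeps a copy on the way back.
    missed = path if hit_tier is None else path[:hit_tier]
    for cache in missed:
        cache.insert(obj)
    return hit_tier


def origin_fetches(path, requests):
    """Count how many requests were served by the origin."""
    return sum(serve(path, obj) is None for obj in requests)


# Three objects requested cyclically; the path offers 1 + 2 = 3 slots.
path = [LRUCache(1), LRUCache(2)]
print(origin_fetches(path, list("abc") * 4))  # prints 12
```

In this toy trace the path has as many slots in total as there are distinct objects, yet all 12 requests reach the origin, because each cache evicts an object just before it is requested again.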

We therefore advocate the notion of one “BIG” cache as an abstraction: each intermediate cache server allots a portion of its cache capacity to serve user requests received by an edge server, but instead of treating these portions as separate caches, we view them collectively as if they were “glued” together to form one “BIG” (virtual) cache, as shown in Figure 1. Objects can then move across the boundaries of the constituent cache pieces as their access rates increase or decrease. As a result, we can fully utilize the allotted cache space at all (intermediate) cache servers along the path from the edge server to the origin server, yielding much higher overall hit rates and thereby reducing both the origin server load and the network bandwidth demand.
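One way to picture the “glued” cache is the sketch below. It is an illustration under stated assumptions, not the implementation from the publications: a single LRU order spans all cache pieces, and an object's rank in that order decides which piece holds it, so the most recently used objects sit nearest the edge. The class `BigLRUCache` and its methods are hypothetical names.

```python
from collections import OrderedDict


class BigLRUCache:
    """Toy model: per-server cache pieces managed as one virtual LRU cache."""

    def __init__(self, piece_capacities):
        # piece_capacities[0] is the piece nearest the edge server.
        if not piece_capacities or any(c <= 0 for c in piece_capacities):
            raise ValueError("each cache piece needs a positive capacity")
        self.piece_capacities = list(piece_capacities)
        self.total_capacity = sum(self.piece_capacities)
        self._order = OrderedDict()  # first key = most recently used

    def request(self, obj):
        """Return True on a hit anywhere in the virtual cache."""
        hit = obj in self._order
        if not hit:
            self._order[obj] = None
        # Promote to the front; objects behind it shift towards the origin.
        self._order.move_to_end(obj, last=False)
        if len(self._order) > self.total_capacity:
            self._order.popitem(last=True)  # evict least recently used
        return hit

    def piece_of(self, obj):
        """Index of the cache piece holding obj, or None if not cached."""
        if obj not in self._order:
            return None
        position = list(self._order).index(obj)
        boundary = 0
        for piece, capacity in enumerate(self.piece_capacities):
            boundary += capacity
            if position < boundary:
                return piece


# Two pieces with 1 and 2 slots, three objects requested cyclically.
cache = BigLRUCache([1, 2])
hits = sum(cache.request(obj) for obj in list("abc") * 4)
print(hits)                 # prints 9
print(cache.piece_of("c"))  # prints 0
print(cache.piece_of("a"))  # prints 1
```

With three slots in total and three distinct objects, only the first request for each object misses. The last-requested object `c` sits in the piece nearest the edge, while `a` and `b` have drifted into the second piece, which mirrors objects crossing piece boundaries as their request pattern changes.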

The “BIG” cache abstraction allows any existing cache replacement strategy, such as LRU, FIFO, k-HIT, or k-LRU, to be applied as a single consistent strategy to the entire (virtual) cache. Our evaluation of the abstraction demonstrates its efficacy over existing methods in a hierarchical tree caching structure. We also develop an optimization framework for cache management under the “BIG” cache abstraction to maximize the overall utility of a cache network. Finally, as an added benefit of the abstraction, we propose a novel and general framework for evaluating caching policies in a hierarchical network of caches. More details about this work can be found in the publications listed below.

Publications

- Performance Estimation and Evaluation Framework for Caching Policies in Hierarchical Caches.
  Eman Ramadan, Pariya Babaie, and Zhi-Li Zhang. Computer Communications, Volume 144, 2019.

- Cache Network Management Using BIG Cache Abstraction.
  Pariya Babaie, Eman Ramadan, and Zhi-Li Zhang. IEEE Conference on Computer Communications (INFOCOM'19), 2019.

- A Framework for Evaluating Caching Policies in a Hierarchical Network of Caches.
  Eman Ramadan, Pariya Babaie, and Zhi-Li Zhang. IFIP Networking Conference and Workshops (IFIP Networking'18), 2018.

- BIG Cache Abstraction for Cache Networks.
  Eman Ramadan, Arvind Narayanan, Zhi-Li Zhang, Runhui Li, and Gong Zhang. IEEE International Conference on Distributed Computing Systems (ICDCS'17), 2017.

Supplementary Materials

- Presentation Slides for INFOCOM'19 Paper

- Presentation Slides for IFIP Networking'18 Paper

- Presentation Slides for ICDCS'17 Paper